From Snail Mail to Warp Speed: How Hubtel Rocketed Its Product Delivery

February 18, 2025 | 7 minutes read

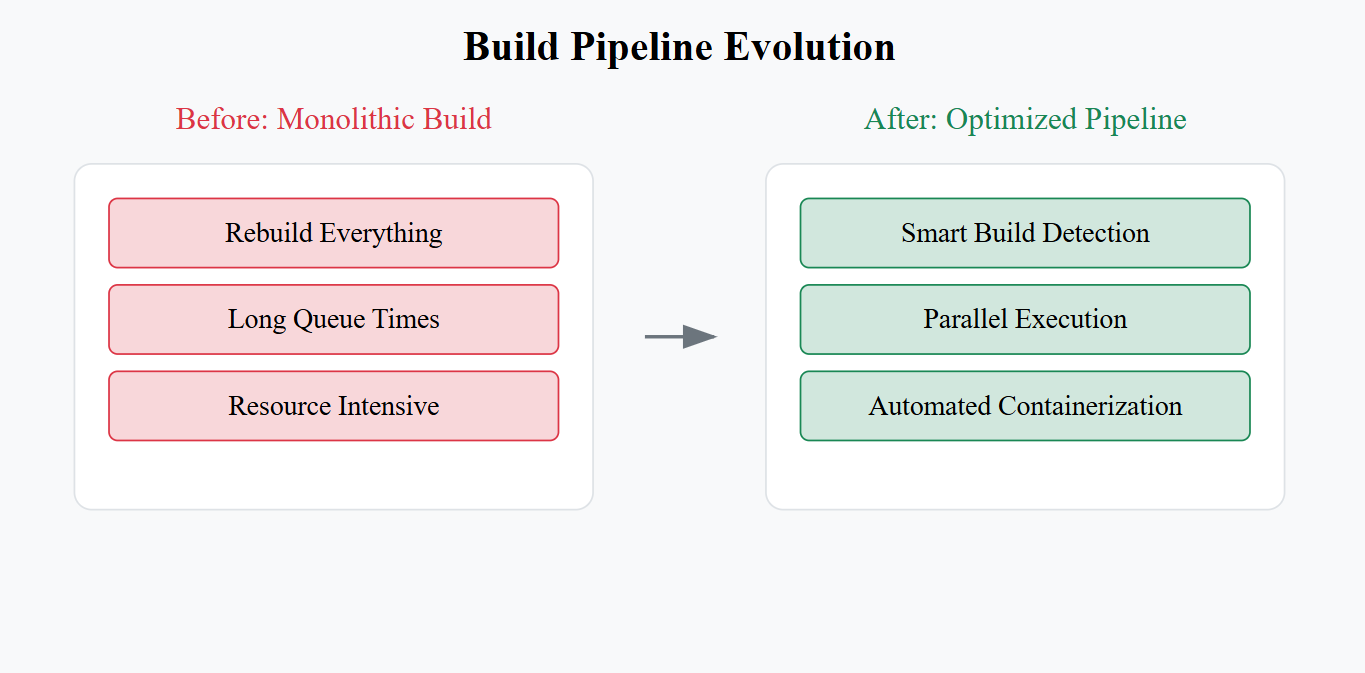

Remember when dial-up was the only way to connect to the internet? Slow, painful, and enough to make you throw your computer out the window? That’s what our software builds felt like at Hubtel for a while.

Our codebase was exploding like a teenager’s growth spurt, and our build pipelines, which used to be pretty snappy, suddenly resembled rush hour traffic on a Friday night.

Instead of popping champagne for new features, we were tapping our fingers impatiently, waiting for builds to crawl across the finish line. Talk about a buzzkill.

Our once-smooth process had become a tangled mess of resource crunches, technical hiccups, and enough waiting time to make a sloth look speedy.

Imagine this: every time we tweaked something, even a tiny thing, we were rebuilding the entire digital city, even if we’d only moved a single lamppost. Clearly, something had to be done.


The Roadblocks: Where We Hit Snags

Resource Constraints & Costs: Think of scaling build pipelines like hosting a massive potluck. You want enough food for everyone, but you don’t want to end up with mountains of leftovers and a hefty grocery bill. We needed a smarter way to feed our builds without breaking the bank.

Queue Times & Delayed Builds: Picture a Black Friday sale, but for code updates. With multiple teams pushing changes constantly, our build queues became a bottleneck of epic proportions. Developers were left twiddling their thumbs, releases were getting pushed back, and frustration levels were reaching boiling point.

Mono-Repository Woes: Mono-repos are like giant filing cabinets – great for keeping everything organized, but a pain to search through. Unlike smaller, more focused repositories, our massive mono-repo meant we had to analyze everything, even for minor changes. It was like searching for a single grain of sand on a beach.

Technical Hurdles: Money alone could not fix this (and, let’s face it, we didn’t have an endless supply of it). The real challenges were technical: building only what had changed and tracking dependencies automatically both required a major overhaul of our build scripts.

Our Game Plan: How We Turned It Around

Instead of just adding more servers (because who has a money tree growing in their backyard?), we decided to get clever. We focused on automation, intelligent build detection, and resource optimization to transform our pipelines into high-speed bullet trains.

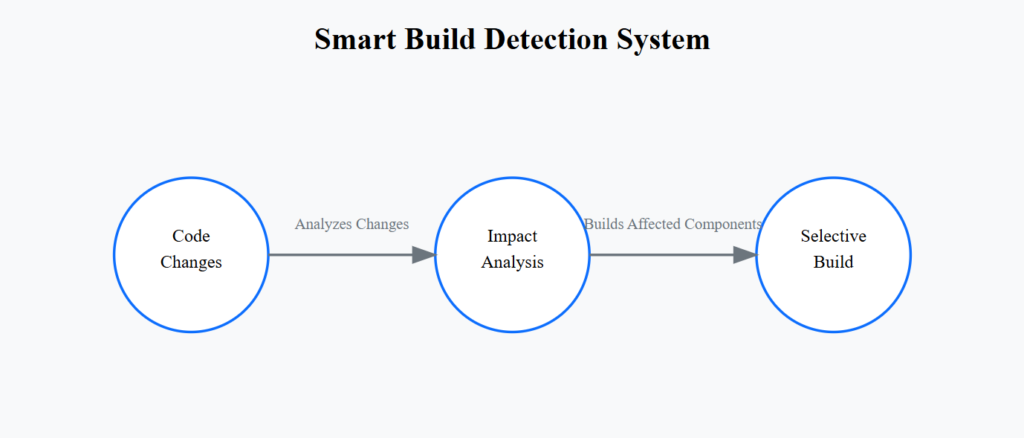

1. Smart Build Detection: We built a system that could pinpoint exactly which parts of our code were affected by any change. Think of it as a Sherlock Holmes for code. This involved creating a map of our codebase that captures the relationships between different parts of the system.

Then, when a change was made, our system could trace its impact, identifying only the areas that needed to be rebuilt. This meant we only had to rebuild what was absolutely necessary. No more rebuilding the whole city for a single lamppost!
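To make the idea concrete, here is a minimal sketch of impact tracing over a dependency map. It is not our production code: the project names and the `DEPENDS_ON` structure are made up for illustration, and the approach shown (inverting the map and walking it breadth-first) is just one straightforward way to find everything downstream of a change.

```python
from collections import defaultdict, deque

# Hypothetical dependency map: project -> projects it depends on.
DEPENDS_ON = {
    "payments-api": ["core-lib", "billing-lib"],
    "billing-lib": ["core-lib"],
    "notifications-api": ["messaging-lib"],
    "messaging-lib": [],
    "core-lib": [],
}


def build_reverse_graph(depends_on):
    """Invert the map so each project points to the projects that depend on it."""
    dependents = defaultdict(set)
    for project, deps in depends_on.items():
        for dep in deps:
            dependents[dep].add(project)
    return dependents


def affected_projects(changed, depends_on):
    """Return the changed projects plus everything that transitively depends on them."""
    dependents = build_reverse_graph(depends_on)
    affected = set(changed)
    queue = deque(changed)
    while queue:
        current = queue.popleft()
        for dependent in dependents.get(current, ()):
            if dependent not in affected:
                affected.add(dependent)
                queue.append(dependent)
    return affected


print(sorted(affected_projects({"billing-lib"}, DEPENDS_ON)))
# → ['billing-lib', 'payments-api']
```

In this toy map, touching `billing-lib` pulls in `payments-api` but leaves the messaging projects alone, which is the "single lamppost" behaviour described above.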

2. Selective Project Builds & Publishing: Our pipeline now intelligently detects changes and only builds and publishes the relevant projects.

This drastically reduces build times and eliminates wasted effort. We essentially taught our system to be a discerning chef, only preparing the dishes that were actually needed. This involves a few key steps:

a. Change Detection: Our system analyzes the code changes and identifies the specific files that have been modified.

b. Project Mapping: It then uses a map of our project dependencies to determine which projects are affected by those changes. Think of it like a spider web, where each project is a node, and the connections represent dependencies. When one node is touched (a file is changed), the system can trace the ripples to see which other nodes (projects) are also affected.

c. Dependency Tracking: It goes a step further, understanding which projects depend on the affected projects. This ensures that even if a project itself hasn’t changed, if a project it relies on has, it will still be included in the build.

d. Selective Building: Finally, it uses this information to build only the necessary projects and their dependencies, skipping everything else.

e. Commit Message Influence (Targeted Builds): But we took it a step further! Developers can now directly influence the build pipeline using their commit messages. This is incredibly useful when a developer knows exactly which projects need to be built and wants to bypass the automatic dependency detection.

For example, if a developer makes a small, isolated change and wants to quickly deploy just that one piece, they can add a special tag or keyword to their commit message to specify the exact projects to build.

This gives developers fine-grained control and further optimizes the build process, allowing them to focus on precisely what needs to be released, instead of relying solely on the system’s automated dependency analysis.

This “targeted build” feature is like giving developers a “fast pass” for their builds, allowing them to jump the line when they know exactly what they need.

Build Pipeline comparison
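The change detection and targeted build steps above can be sketched roughly as follows. Everything named here is an assumption for illustration: the `src/<project>` directory layout, the `PROJECT_ROOTS` table, and the `[build: ...]` tag syntax are invented, since the actual keyword format is not spelled out in this post. The list of changed files would typically come from something like `git diff --name-only`.

```python
import re
from pathlib import PurePosixPath

# Hypothetical layout: each project lives in its own directory under src/.
PROJECT_ROOTS = {
    "src/payments-api": "payments-api",
    "src/billing-lib": "billing-lib",
    "src/core-lib": "core-lib",
}

# Hypothetical tag syntax, e.g. "[build: payments-api, core-lib]".
BUILD_TAG = re.compile(r"\[build:\s*([^\]]+)\]", re.IGNORECASE)


def projects_for_files(changed_files):
    """Map changed file paths to the projects that own them."""
    projects = set()
    for path in changed_files:
        parents = {str(parent) for parent in PurePosixPath(path).parents}
        for root, project in PROJECT_ROOTS.items():
            if root in parents:
                projects.add(project)
    return projects


def explicit_targets(commit_message):
    """Return the projects named in a build tag, or None when no tag is present."""
    match = BUILD_TAG.search(commit_message)
    if match is None:
        return None
    return {name.strip() for name in match.group(1).split(",") if name.strip()}


def select_builds(commit_message, changed_files):
    """Prefer explicit targets from the commit message; otherwise use detected changes."""
    targets = explicit_targets(commit_message)
    if targets is not None:
        return targets
    return projects_for_files(changed_files)


print(select_builds("Fix rounding [build: billing-lib]", ["src/core-lib/money.py"]))
# → {'billing-lib'}
```

In the automatic path, the detected projects would then be expanded with their dependents before building. The sketch also trusts the tag blindly; a real pipeline would likely want to reject project names it does not recognise.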

3. Automated Code Quality Checks: Every new pull request now gets a thorough code quality check-up, ensuring we maintain high standards without slowing things down.

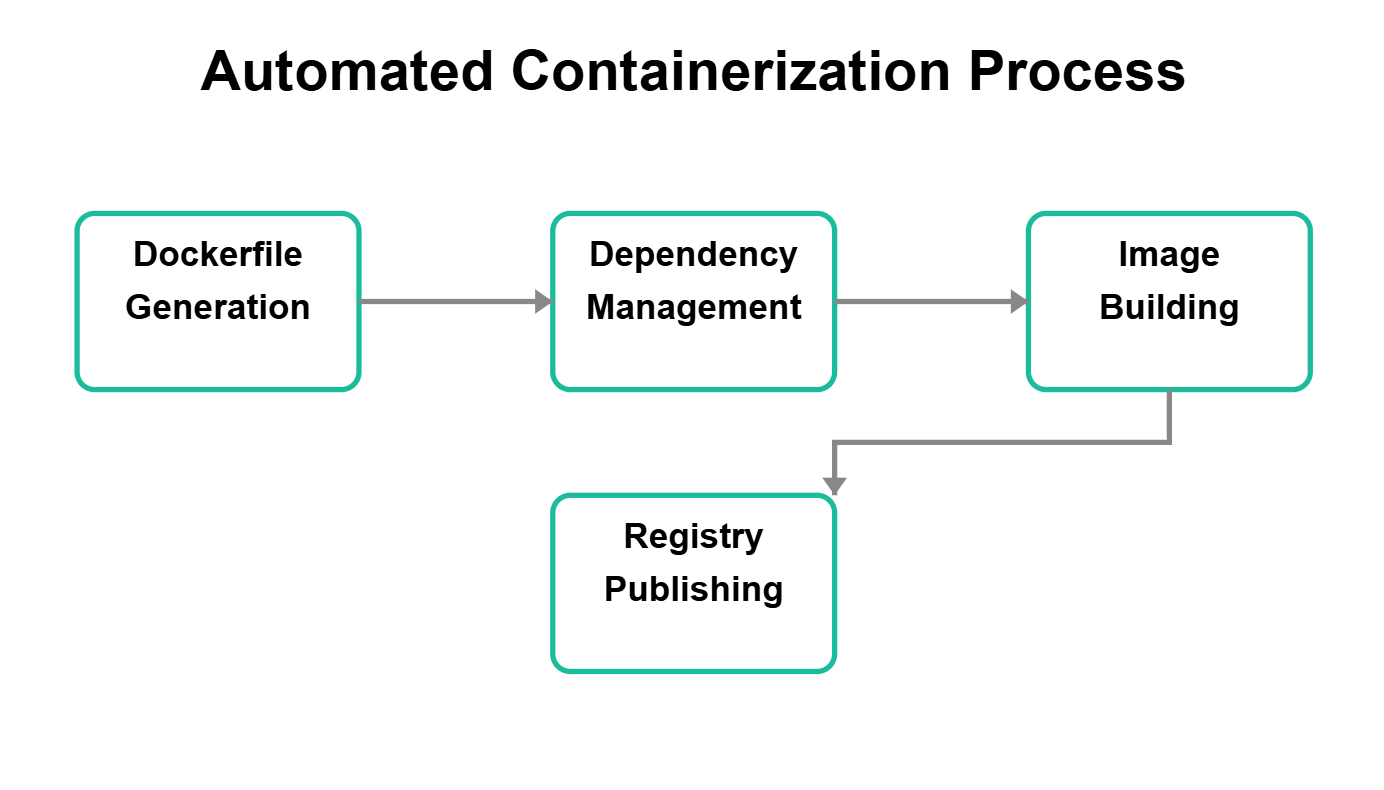

4. Automated Containerization: Say goodbye to manual Dockerfile management! Our pipeline now automatically containerizes our applications, making deployments smoother than a hot knife through butter. This involved automating the entire containerization process:

a. Dockerfile Generation: Our system automatically generates Dockerfiles for each project, based on its dependencies and configuration. It’s like having a master chef who knows exactly what ingredients and instructions are needed for each dish. These Dockerfiles define the environment in which our applications will run.

b. Dependency Management: The generated Dockerfiles include instructions for installing the necessary dependencies, ensuring that each container has everything it needs to run.

c. Image Building: Our pipeline then automatically builds Docker images from these Dockerfiles. These images are like packaged versions of our applications, ready to be deployed.

d. Registry Publishing: Finally, these images are automatically pushed to container registries (like Amazon ECR or GitHub Container Registry), making them readily available for deployment.

Automated Containerization Process
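As a rough illustration of the Dockerfile generation step, here is a small sketch that renders a Dockerfile from per-project settings. The `ProjectConfig` fields, the example values, and the registry host are all hypothetical; our real generator reads more configuration than this, and the sketch only shows the general shape of template-driven generation.

```python
import json
from dataclasses import dataclass, field


@dataclass
class ProjectConfig:
    """Hypothetical per-project settings the generator reads."""
    name: str
    base_image: str
    install_commands: list = field(default_factory=list)
    entrypoint: list = field(default_factory=list)
    port: int = 8080


def render_dockerfile(config):
    """Render a Dockerfile as text from the project's settings."""
    lines = [f"FROM {config.base_image}", "WORKDIR /app", "COPY . ."]
    # One RUN instruction per dependency installation command.
    lines += [f"RUN {command}" for command in config.install_commands]
    lines.append(f"EXPOSE {config.port}")
    # The exec form of ENTRYPOINT is a JSON array, so json.dumps produces it directly.
    lines.append(f"ENTRYPOINT {json.dumps(config.entrypoint)}")
    return "\n".join(lines) + "\n"


def image_reference(registry, config, version):
    """Build the tag under which the image would be pushed."""
    return f"{registry}/{config.name}:{version}"


config = ProjectConfig(
    name="payments-api",
    base_image="python:3.12-slim",
    install_commands=["pip install --no-cache-dir -r requirements.txt"],
    entrypoint=["python", "main.py"],
)
print(render_dockerfile(config))
print(image_reference("registry.example.com", config, "1.4.2"))
```

A pipeline step could write the rendered text to disk and then run `docker build -t <reference> .` followed by `docker push <reference>` to cover the image building and registry publishing steps.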

5. Dynamic Resource Allocation: We built a system that automatically scales our computing power up during peak build times and scales it back down when things are quieter.

This ensures we have the resources we need without wasting money. It’s like having a thermostat for our builds, keeping the temperature just right.
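The "thermostat" can be pictured as a small sizing rule that runs on a schedule. The sketch below is an assumption-laden simplification: the function name, the `builds_per_agent` capacity figure, and the minimum and maximum limits are illustrative values, not our actual settings, and a real scaler would also need to account for agent start-up time and cool-down periods.

```python
import math


def desired_agents(queued_builds, running_builds,
                   builds_per_agent=2, min_agents=1, max_agents=20):
    """Size the build agent pool from current demand, within fixed limits."""
    demand = queued_builds + running_builds
    needed = math.ceil(demand / builds_per_agent)
    # Clamp so the pool never drops below the floor or exceeds the budget ceiling.
    return max(min_agents, min(max_agents, needed))


print(desired_agents(queued_builds=7, running_builds=2))    # → 5
print(desired_agents(queued_builds=0, running_builds=0))    # → 1
print(desired_agents(queued_builds=100, running_builds=0))  # → 20
```

The upper limit is what keeps costs predictable during a spike, while the lower limit keeps at least one agent warm so the first build of a quiet period does not wait for a machine to start.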

The Payoff: Faster, Smoother, and More Scalable Builds

The results? Let’s just say they were pretty impressive. Build times plummeted, queue times were slashed, and we significantly optimized our resource usage, saving a considerable chunk of change.

Developers went from dreading build times to getting feedback almost instantly. It was like going from snail mail to warp speed!

Lessons Learned: Smarter, Not Harder

Our journey taught us a valuable lesson: optimizing build pipelines isn’t about throwing money at the problem; it’s about being smart, innovative, and always striving to improve. Through automation, selective builds, and dynamic resource management, we’ve built a system that’s not just good – it’s awesome.

At Hubtel, we’re not resting on our laurels. We’re constantly looking for new ways to make our builds even faster and more efficient. Think of us as software chefs, always experimenting with new ingredients and techniques to create the ultimate recipe for speed and efficiency.

So, if your builds are feeling a bit sluggish, take a page from our book. With the right strategies, even the most complex build systems can be transformed into lean, mean, speed machines that fuel innovation and seamless product delivery.

Authors

Whitson Dzimah

Lead Engineer, Continuous Integration at Hubtel
